Lesson 1.11.2: Bias-Variance Tradeoff

The bias-variance tradeoff is a core concept in machine learning that explains the balance between a model's simplicity and its complexity. Understanding the two sources of prediction error, bias and variance, is important for the accuracy of any machine-learning algorithm. A model usually cannot minimize both at once: reducing one tends to increase the other, and this tradeoff guides choices such as the value of the regularization constant. A proper understanding of these errors helps to avoid overfitting and underfitting when training an algorithm on a data set.

What is Bias?

Bias is the difference between the average prediction of the machine learning model and the correct value. A model with high bias has a large error on both training and testing data. An algorithm should have low bias to avoid the problem of underfitting. With high bias, the predictions follow an overly simple shape, such as a straight line, and do not fit the data in the data set accurately. Such a fit is known as underfitting. It happens when the hypothesis is too simple, for example linear when the true relationship is curved. The following example illustrates such a situation.

- Consider just the training set. The first machine learning algorithm that we will use is linear regression, also known as least-squares linear regression. For example, let the x-axis be weight and the y-axis be height.

- Linear regression fits a straight line to the training set. Note that the straight line doesn't have the flexibility to accurately replicate the arc in the true relationship: no matter how we try to fit the line, it will never curve.

- Thus the straight line will never capture the true relationship between weight and height, no matter how well we fit it to the training set. The inability of a machine learning method like linear regression to capture the true relationship is called bias.
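The bullets above can be made concrete with a small sketch. It assumes a hypothetical, noise-free training set in which height follows a square-root arc in weight (the function and the numbers are illustrative, not taken from the lesson), fits a least-squares straight line with the closed-form formulas, and prints the residuals.

```python
import math


def fit_line(xs, ys):
    """Closed-form least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical training set: the "true relationship" is an arc, with no noise.
weights = [float(w) for w in range(1, 11)]
heights = [10.0 * math.sqrt(w) for w in weights]

intercept, slope = fit_line(weights, heights)

# Residual = observed height minus the height predicted by the line.
residuals = [h - (intercept + slope * w) for w, h in zip(weights, heights)]
train_sse = sum(r ** 2 for r in residuals)

print("residuals:", [round(r, 2) for r in residuals])
print("training sum of squares:", round(train_sse, 2))
```

Because the assumed relationship is concave, the best-fitting line overshoots at both ends and undershoots in the middle. The residuals form a systematic pattern rather than random scatter, and no choice of slope or intercept removes it. That leftover error is the bias.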

What is Variance?

Variance is the variability of the model's prediction for a given data point when the model is trained on different data sets; it tells us how much the predictions spread. A model with high variance fits the training data with a very complex curve and is therefore not able to fit accurately on data it hasn't seen before. As a result, such models perform very well on training data but have high error rates on test data. A model with high variance is said to overfit the data. Overfitting means fitting the training set closely with a complex curve and a high-order hypothesis; it is not a solution, because the error on unseen data is high. When training a model, variance should be kept low. The following example shows what high variance looks like.

- Another machine learning method might fit a squiggly line to the training set. The squiggly line is super flexible and hugs the training set along the arc of the true relationship.

- Because the squiggly line can handle the arc in the true relationship between weight and height, it has very little bias. We can compare how well the straight line and the squiggly line fit the training set by calculating their sums of squares.

Bias-Variance Tradeoff

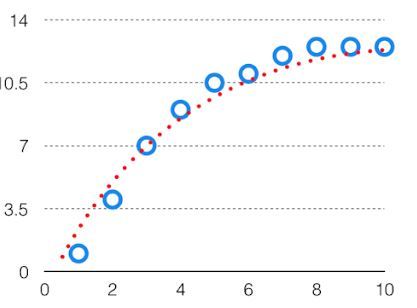

If the algorithm is too simple (for example, a hypothesis with a linear equation), it may have high bias and low variance, and is therefore error-prone. If the algorithm is too complex (for example, a hypothesis with a high-degree equation), it may have high variance and low bias; in that case the model will not perform well on new entries. Between these two conditions lies a compromise known as the bias-variance tradeoff. Bias and variance trade off against each other because both depend on model complexity, and an algorithm can't be more complex and less complex at the same time. The example below continues the weight-and-height comparison to show why.

- In the contest to see whether the straight line fits the training set better than the squiggly line, the squiggly line wins. But remember, so far we've only calculated the sums of squares for the training set. We also have a testing set.

- Now let's calculate the sums of squares for the testing set, in the contest to see whether the straight line fits the testing set better than the squiggly line.

- The straight line wins. Even though the squiggly line did a great job fitting the training set, it did a terrible job fitting the testing set.

- In machine learning lingo, the difference in fits between data sets is called variance. The squiggly line has low bias, since it is flexible and can adapt to the curve in the relationship between weight and height, but it has high variance, because it results in vastly different sums of squares for different data sets. In other words, it's hard to predict how well the squiggly line will perform with future data sets: it might do well sometimes, and other times it might do terribly.

- In contrast, the straight line has relatively high bias, since it cannot capture the curve in the relationship between weight and height, but it has relatively low variance, because the sums of squares are very similar for different data sets. In other words, the straight line might only give good predictions, not great ones, but they will be consistently good.
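The two quantities compared above have a standard formal counterpart for squared-error loss. As a sketch, assume (this notation is not in the original lesson) that the data are generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and that $\hat{f}$ is the model fitted to a randomly drawn training set. The expected squared error at a point $x$, averaged over training sets and noise, then splits into three terms:

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

In these terms, the straight line has a large first term and a small second term, while the squiggly line has the reverse. The third term does not depend on the model at all.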

The Tradeoff in Action

Training vs. Testing Performance

- Straight Line (High Bias, Low Variance):

  - Training Error: High (doesn’t fit well).

  - Testing Error: Similar to training error (consistent but not great).

- Squiggly Line (Low Bias, High Variance):

  - Training Error: Almost zero (fits perfectly).

  - Testing Error: Very high (fails to generalize).
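The comparison above can be reproduced with a minimal sketch. Everything specific in it is an assumption made for illustration: the square-root arc used as the true relationship, the noise level, the random seed, and the use of a connect-the-dots curve as a stand-in for the squiggly line. It reports the mean squared error (the sum of squares divided by the number of points) so that the training and testing sets, which differ in size, are comparable.

```python
import bisect
import math
import random


def fit_line(xs, ys):
    """Closed-form least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def connect_the_dots(train_x, train_y, x):
    """Piecewise-linear curve through every training point (sorted, distinct x)."""
    if x <= train_x[0]:
        return train_y[0]
    if x >= train_x[-1]:
        return train_y[-1]
    i = bisect.bisect_right(train_x, x)  # train_x[i - 1] <= x < train_x[i]
    x0, x1 = train_x[i - 1], train_x[i]
    y0, y1 = train_y[i - 1], train_y[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def mean_squared_error(predict, xs, ys):
    """Sum of squared residuals divided by the number of points."""
    return sum((y - predict(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)  # fixed seed so the run is repeatable


def noisy_heights(weights, noise_sd=3.0):
    # Assumed true relationship: a square-root arc plus Gaussian noise.
    return [10.0 * math.sqrt(w) + rng.gauss(0.0, noise_sd) for w in weights]


train_x = [float(w) for w in range(1, 11)]  # weights 1, 2, ..., 10
test_x = [w + 0.5 for w in train_x[:-1]]    # weights 1.5, 2.5, ..., 9.5
train_y = noisy_heights(train_x)
test_y = noisy_heights(test_x)

intercept, slope = fit_line(train_x, train_y)


def straight_line(x):
    return intercept + slope * x


def squiggly_line(x):
    return connect_the_dots(train_x, train_y, x)


errors = {}
for name, model in [("straight line", straight_line), ("squiggly line", squiggly_line)]:
    errors[name] = (
        mean_squared_error(model, train_x, train_y),
        mean_squared_error(model, test_x, test_y),
    )
    print(f"{name}: train MSE = {errors[name][0]:.2f}, test MSE = {errors[name][1]:.2f}")
```

The connect-the-dots curve passes through every training point, so its training error is exactly zero, yet its testing error is not. Which model wins on the testing set depends on the noise level and the particular draw; the point of the sketch is the gap between training and testing error for the flexible model.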

The "Sweet Spot"

- Goal: A model that balances bias and variance.

  - Not too simple (avoid high bias).

  - Not too complex (avoid high variance).

- Example: A moderately curved line (not straight, not too squiggly).
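One common way to look for this balance point is to sweep model complexity and compare the error on held-out data. The sketch below does this for polynomial degree. The synthetic data, the seed, the range of degrees, and the even/odd split into training and validation sets are all assumptions made for illustration. To stay dependency-free it solves the normal equations by hand; in practice a numerical library would normally do this step.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_poly(xs, ys, degree):
    """Least-squares polynomial coefficients, lowest power first."""
    powers = range(degree + 1)
    gram = [[sum(x ** (i + j) for x in xs) for j in powers] for i in powers]
    moment = [sum(y * x ** i for x, y in zip(xs, ys)) for i in powers]
    return solve(gram, moment)


def predict(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


def mse(coeffs, xs, ys):
    return sum((y - predict(coeffs, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(1)  # fixed seed so the run is repeatable
weights = [1.0 + 9.0 * i / 23 for i in range(24)]  # 24 weights in [1, 10]
heights = [10.0 * math.sqrt(w) + rng.gauss(0.0, 1.5) for w in weights]
scaled = [(w - 5.5) / 4.5 for w in weights]  # rescale to [-1, 1] for stability

# Even-indexed points train the model; odd-indexed points are held out.
train_x, train_y = scaled[0::2], heights[0::2]
val_x, val_y = scaled[1::2], heights[1::2]

degrees = range(1, 6)
train_mse = {}
val_mse = {}
for degree in degrees:
    coeffs = fit_poly(train_x, train_y, degree)
    train_mse[degree] = mse(coeffs, train_x, train_y)
    val_mse[degree] = mse(coeffs, val_x, val_y)
    print(f"degree {degree}: train MSE = {train_mse[degree]:.3f}, "
          f"validation MSE = {val_mse[degree]:.3f}")

best_degree = min(val_mse, key=val_mse.get)
print("degree with lowest validation error:", best_degree)
```

Training error can only go down as the degree grows, because each higher-degree model contains the lower-degree ones. It therefore cannot be used to pick the degree; the validation error is what points to a model that is neither too simple nor too complex.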

How to Fix Bias & Variance Issues?

| Problem | Solution |
|---|---|
| High Bias (Underfitting) | Use a more complex model (e.g., polynomial regression instead of linear). |
| High Variance (Overfitting) | Use regularization or ensemble methods such as bagging, and use cross-validation to choose the model complexity. |

Methods:

- Regularization (e.g., Lasso/Ridge Regression) → Penalizes complexity.

- Boosting (e.g., AdaBoost) → Combines weak learners to reduce bias.

- Bagging (e.g., Random Forest) → Reduces variance by averaging predictions.
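As an illustration of the first method, the sketch below applies a ridge (L2) penalty to a degree-5 polynomial and shows how the penalty strength changes the fit. The synthetic data, the seed, the degree, and the list of penalty values are assumptions, and the hand-written solver is only there to keep the example self-contained. The intercept is left unpenalized, which is a common convention.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_ridge_poly(xs, ys, degree, lam):
    """Polynomial least squares with an L2 penalty lam on the non-intercept coefficients."""
    powers = range(degree + 1)
    gram = [[sum(x ** (i + j) for x in xs) for j in powers] for i in powers]
    moment = [sum(y * x ** i for x, y in zip(xs, ys)) for i in powers]
    for i in range(1, degree + 1):  # index 0 is the intercept: not penalized
        gram[i][i] += lam
    return solve(gram, moment)


def predict(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


rng = random.Random(2)  # fixed seed so the run is repeatable
weights = [1.0 + 9.0 * i / 11 for i in range(12)]  # 12 weights in [1, 10]
heights = [10.0 * math.sqrt(w) + rng.gauss(0.0, 1.5) for w in weights]
scaled = [(w - 5.5) / 4.5 for w in weights]  # rescale to [-1, 1] for stability

lambdas = [0.0, 0.01, 0.1, 1.0, 10.0]
penalties = []   # sum of squared non-intercept coefficients per lambda
train_sse = []   # training sum of squares per lambda
for lam in lambdas:
    coeffs = fit_ridge_poly(scaled, heights, degree=5, lam=lam)
    penalties.append(sum(c ** 2 for c in coeffs[1:]))
    train_sse.append(sum((y - predict(coeffs, x)) ** 2 for x, y in zip(scaled, heights)))
    print(f"lambda = {lam:g}: squared coefficient size = {penalties[-1]:.2f}, "
          f"training SSE = {train_sse[-1]:.2f}")
```

A larger penalty shrinks the coefficients toward a smoother curve and gives up some training fit in exchange. That is the sense in which regularization penalizes complexity: it moves a flexible model back from the high-variance end of the tradeoff.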